The rapid evolution of cloud computing has ushered in a new era of visual experiences, particularly in the realm of mobile applications. Among the most significant advancements is the emergence of mobile cloud-based picture quality grading, a technology that is reshaping how users consume and interact with digital content. This innovation leverages the power of remote servers to process and enhance visual data, delivering superior image quality without overburdening local hardware. As streaming services, gaming platforms, and social media networks increasingly adopt this approach, understanding its mechanics and implications becomes crucial for both developers and end-users.

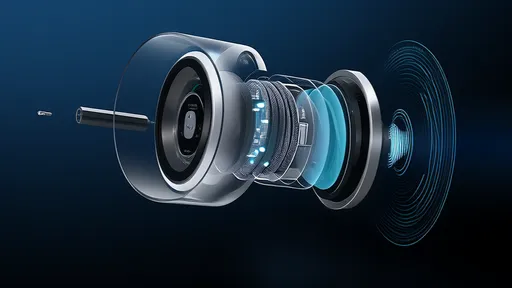

At its core, mobile cloud picture quality grading offloads computationally intensive tasks from smartphones to cloud servers. Smartphones struggle to render high-resolution content in real time because of thermal constraints and limited processing power. Cloud-based solutions instead analyze each frame on the server and dynamically adjust parameters such as bitrate, contrast, and color accuracy. The processed content then streams back to the device in a format tailored to its display specifications and current network conditions. This approach conserves battery life and helps deliver consistent quality across diverse hardware configurations.

The implementation of this technology varies across industries, with streaming platforms among the earliest adopters. Services now use adaptive bitrate (ABR) ladders, tuned with cloud-side analytics, to serve the resolution best suited to each viewer's connection speed. A viewer on a congested 4G network might receive 720p HDR content with more aggressive compression, while another on fiber-optic broadband could enjoy native 4K with expanded dynamic range. What sets this system apart is its contextual awareness: rather than merely reacting to bandwidth fluctuations, it anticipates them using machine learning models trained on millions of viewing sessions.
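
The rung-selection step of an ABR ladder can be sketched in a few lines. The paragraph above describes machine learning forecasts; this sketch swaps in a much simpler exponentially weighted moving average (EWMA) as a stand-in predictor. The ladder rungs, bitrates, smoothing factor `alpha`, and `safety` margin are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Rung:
    """One rung of a bitrate ladder (values below are hypothetical)."""
    label: str
    bitrate_kbps: int


# Hypothetical ladder, ordered from lowest to highest bitrate.
LADDER: Tuple[Rung, ...] = (
    Rung("480p SDR", 1_500),
    Rung("720p HDR", 4_000),
    Rung("1080p HDR", 8_000),
    Rung("2160p HDR", 16_000),
)


def predict_throughput(samples_kbps: Sequence[float], alpha: float = 0.3) -> float:
    """Forecast throughput with an EWMA (a stand-in for an ML predictor)."""
    if not samples_kbps:
        raise ValueError("need at least one throughput sample")
    estimate = samples_kbps[0]
    for sample in samples_kbps[1:]:
        # The newest sample gets weight alpha; history decays by (1 - alpha).
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate


def choose_rung(samples_kbps: Sequence[float], ladder: Sequence[Rung] = LADDER,
                safety: float = 0.8) -> Rung:
    """Pick the highest rung that fits within a safety margin of the forecast."""
    budget = predict_throughput(samples_kbps) * safety
    chosen = ladder[0]  # fall back to the lowest rung if nothing fits
    for rung in ladder:
        if rung.bitrate_kbps <= budget:
            chosen = rung
    return chosen
```

For a congested link, `choose_rung([6_000, 5_500, 5_000])` forecasts about 5,595 kbps, leaves a 4,476 kbps budget after the 0.8 margin, and selects the 720p HDR rung; `choose_rung([50_000, 52_000])` selects 2160p HDR. The safety margin is what lets the player absorb a dip before it stalls, which is the "anticipate rather than react" behavior in its crudest form.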

Gaming applications show even more transformative potential through cloud-based rendering. Titles that would traditionally require flagship GPUs now run on mid-range smartphones by offloading rendering to remote server farms. The cloud handles ray tracing, global illumination, and other demanding effects, then transmits the gameplay as a video stream. Latency remains the primary hurdle, though advances in edge computing have cut delays to under 50 milliseconds in optimal conditions. This shift lets mobile gamers experience graphics previously exclusive to high-end PCs and consoles, fundamentally altering expectations for portable gaming quality.
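
Why edge computing matters for a 50 ms target becomes clearer with a simple latency budget. This sketch uses the common rule of thumb that light in optical fiber covers roughly 200 km per millisecond; the per-stage processing times are hypothetical placeholders, not measurements from any service.

```python
from typing import Mapping

# Rule of thumb: light in optical fiber travels roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Best-case fiber round trip; real routes are longer and add queuing delay."""
    return 2 * distance_km / FIBER_KM_PER_MS


def total_latency_ms(distance_km: float, stage_ms: Mapping[str, float]) -> float:
    """Network round trip plus caller-supplied processing time for each stage."""
    return round_trip_propagation_ms(distance_km) + sum(stage_ms.values())


def fits_budget(distance_km: float, stage_ms: Mapping[str, float],
                budget_ms: float = 50.0) -> bool:
    """True if the end-to-end delay stays within the latency budget."""
    return total_latency_ms(distance_km, stage_ms) <= budget_ms


# Hypothetical per-stage timings in milliseconds, for illustration only.
STAGES = {"input": 2.0, "render": 10.0, "encode": 5.0, "decode": 5.0, "display": 8.0}
```

With these placeholder timings (30 ms of processing), an edge server 50 km away adds only about 0.5 ms of propagation delay, for 30.5 ms in total. A data center 2,500 km away adds about 25 ms and pushes the total to 55 ms, past the 50 ms target, even before routing detours and congestion are counted.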

Technical challenges persist despite these breakthroughs. Network reliability is paramount, since packet loss or jitter can degrade visual fidelity more severely than native rendering artifacts. Engineers counter this with AI-driven error correction and redundant encoding schemes that prioritize critical visual data. Another obstacle is the environmental impact of data centers handling rapidly growing video processing loads. Some providers now reduce their carbon footprint by dynamically routing tasks to servers powered by renewable energy during off-peak hours, a practice that may become standard as sustainability concerns intensify.
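
The redundant-encoding idea can be sketched with simple XOR parity, one of the most basic forward error correction (FEC) schemes; the AI-driven part is beyond a short example. To "prioritize critical visual data", the sketch applies unequal protection: keyframe packets, which later frames depend on, get more parity than delta-frame packets. The function names and group sizes are hypothetical, and the sketch assumes fixed-size packets with at most one loss per group.

```python
from functools import reduce
from typing import List, Optional, Sequence, Tuple


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length packets."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes fixed-size packets")
    return bytes(x ^ y for x, y in zip(a, b))


def protect(packets: Sequence[bytes],
            group_size: int) -> List[Tuple[List[bytes], bytes]]:
    """Split packets into groups and attach one XOR parity packet to each group."""
    groups = [list(packets[i:i + group_size])
              for i in range(0, len(packets), group_size)]
    return [(group, reduce(xor_bytes, group)) for group in groups]


def recover(received: Sequence[Optional[bytes]],
            parity: bytes) -> List[Optional[bytes]]:
    """Rebuild at most one lost packet (marked None) in a group from its parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one loss per group")
    repaired = list(received)
    if missing:
        # XOR of the parity with every surviving packet yields the lost packet.
        survivors = (p for p in received if p is not None)
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired


# Hypothetical unequal protection: keyframe data gets one parity packet per
# 2 packets (50% overhead), delta-frame data one per 4 (25% overhead).
GROUP_SIZE = {"keyframe": 2, "delta": 4}
```

The trade-off is bandwidth: smaller groups survive more losses but cost more overhead, so spending that overhead on the packets whose loss would corrupt the most subsequent frames is a reasonable default.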

Consumer behavior is adapting to these technological shifts in unexpected ways. Studies indicate that users now abandon streaming sessions 43% faster after a resolution drop than they did before cloud-based grading became widespread, a sign of heightened expectations. Conversely, engagement metrics climb when platforms consistently deliver superior picture quality, suggesting that visual fidelity increasingly drives monetization potential. This trend pressures developers to refine their cloud pipelines continually, fostering an innovation cycle in which each incremental improvement yields measurable gains in user retention.

Looking ahead, the convergence of widespread 5G and cloud-based grading promises near-instantaneous access to theater-grade visuals on handheld devices. Early trials of light field streaming, in which cloud servers generate multiple perspective views to produce rudimentary holographic effects, hint at applications beyond conventional screens. As augmented reality interfaces mature, cloud-processed imagery will likely become the backbone of mobile spatial computing, blending real and virtual environments with unprecedented realism. The mobile cloud picture quality revolution isn't merely enhancing what we see; it's redefining how we perceive digital information altogether.

By /Aug 7, 2025